Contextualising web archiving

The speed at which the internet became a fundamental tool of contemporary life and culture is matched only by the rate at which online information can change and disappear. As the internet has become a resource for understanding contemporary social history, web archiving is the process of capturing and preserving, for future research, online data and the way it is created, displayed and stored. Although web archiving has become the preferred method of capturing this information, the fast-changing nature of the internet and its technologies means that archiving methodologies must also be able to evolve and expand quickly. In the words of Research Data Librarian Alex Ball, ‘perfecting the process [of web archiving] is something of a moving target, both in terms of the quantities involved and the sophistication and complexity of the subject material’.1 In her 2013 web archiving report for the Digital Preservation Coalition, Maureen Pennock states that the pace and ease with which the internet changes is a threat not only to ‘our digital cultural memory, its technical legacy, evolution and our social history’, but also to ‘organisational accountability’.2 This is especially true of public records bodies like Tate, which are obliged under the Public Records Act (1958) to keep records of all their activities.3

Beyond authenticity and integrity, the Digital Preservation Coalition also highlights other concerns around web archiving in the context of institutional recordkeeping, including ‘the issue of selection (i.e. which websites to archive), a seemingly straightforward task which is complicated by the complex inter-relationships shared by most websites that make it difficult to set boundaries’.4 To this we can add the question of how often websites should be archived, which in turn raises issues of capacity and infrastructure. This links back to the fact that online information can change rapidly, with link rot threatening its loss.5 We will return to this later in the text, in the context of Tate’s website and its institutional recordkeeping obligations.

Tate actively used microsites from 2000 to 2012.6 These are smaller, additional websites, created for specific purposes, that sit under a main website. Tate’s Intermedia Art web pages, actively maintained between 2004 and 2012, are an example of a microsite. Alongside Tate Britain, Tate Modern, Tate Liverpool and Tate St Ives, Tate Online was intended to serve as the museum’s fifth gallery, putting the collection and institutional practices front and centre.7 The microsites served as areas dedicated to specific practices or exhibitions and, like the Intermedia Art microsite, offered the opportunity to experiment with new technologies and interactivity.8 Tate stopped using microsites when the website was redesigned in 2015. They were moved onto a secondary server, and several of them could no longer be reached by navigating from the main site.9

In the context of the net art works Tate commissioned between 2000 and 2012, the internet has been used both as a site for site-specific practice and as a tool to extend artists’ networks, enabling wider distribution, communication and collaboration; but the Intermedia Art microsite also served as a vehicle for exhibition and interpretation. To archive such a microsite effectively means capturing its interactivity and functionality, not just its informational content, while maintaining its integrity and presentation. Screenshots of informational content would not capture the interactivity that was key to its creation and function. A foundational principle of archiving is preserving the context in which the information or record was created.10 When archiving websites, this includes capturing how the website worked and where it sat within the structure of any larger site, which is especially pertinent here because Intermedia Art is a microsite.

The Internet Archive, founded in 1996, has led the way in developing tools and technical solutions for web archiving, including web crawlers and its own repository, the Wayback Machine.11 In the UK, the UK Government Web Archive captures, preserves and makes available whole websites and all social media produced by government bodies (and public records bodies, such as Tate). The UK Web Archive, a collaboration between all the UK legal deposit libraries, also undertakes an automated crawl of all UK-based websites once a year.12 While an individual web page may contain the informational content to be archived, by capturing whole websites, web archives follow archival principles and capture the context that gives archival records their value as records. In a physical archive, a piece of information can be trusted because it is contextualised by the other information in that archive: it is part of a collection of records, and we understand how the information was created because of the context and provenance of the whole collection. Context and provenance tell us how, why and by whom a record was created, giving records their evidentiary value. A single artwork on the Intermedia Art microsite stands alone, but we understand it as part of a commissioned series because of the other artworks and pages on the microsite; we understand that it was commissioned by Tate because of its relationship to the structure of the overarching website. The UK Web Archive also curates collections of websites dedicated to specialist, specific or themed topics, which places value on content rather than on the context of creation. This troubles traditional archival principles of provenance but is an example of practice adapting to the creation and use of new digital information and resources.
Other organisations such as the International Internet Preservation Consortium (IIPC) and the UK’s Digital Preservation Coalition (DPC) offer guidance and collaborative research into the best ways to archive the internet.

The National Archives’ Web Archiving Guidance (2011) highlights three technical methods for web archiving.13 The first, ‘client-side’ web archiving, employs a crawler that works within the boundaries of a domain, mimicking the behaviour of a user and capturing links and other media within that domain. This is the most commonly used method for government web archiving initiatives and is also used by the Internet Archive.14 The second, ‘transaction-based’ web archiving, is usually implemented on a case-by-case basis to capture information that is fundamental to a website but inaccessible to crawlers. The third, ‘server-side’ web archiving, creates an entire disc-image directly from the server, providing all the software and data needed to reactivate any dynamic content such as video or audio.15 To create a disc-image is to clone all of the data, content and structure of the digital information; in this web archiving context, it would therefore also include information about how the website was originally built and the technologies used. Capturing a disc-image makes it possible to contain the website and all of its information and to emulate expired technologies, and would give researchers the best understanding of how the original website looked and functioned. The transaction-based and server-side methods require active collaboration with the servers that host the websites and are therefore not viable for mass indexing or captures.
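To make the client-side method more concrete, the core loop of a domain-bounded crawler can be sketched in a few lines of Python: start from a seed URL, extract links and embedded media, and queue only URLs on the same host. This is a minimal illustration, not how the National Archives’ or the Internet Archive’s crawlers are actually implemented. The `fetch` callable stands in for HTTP requests (an assumption that keeps the example offline), and the example.org pages are hypothetical. Production crawlers also handle robots.txt, rate limiting, redirects and non-HTML media, and, like this sketch, they cannot see content that only appears through user interaction.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, List, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects href and src attribute values (links and embedded media)."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def crawl_domain(
    start_url: str,
    fetch: Callable[[str], Optional[str]],
    max_pages: int = 1000,
) -> List[str]:
    """Breadth-first crawl that only follows URLs on the start URL's host.

    `fetch` returns a page's HTML, or None if it cannot be retrieved.
    Returns the URLs that were successfully fetched, in crawl order.
    """
    domain = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    fetched: List[str] = []
    while queue and len(fetched) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # broken link or non-HTML resource
            continue
        fetched.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for link in parser.links:
            # Resolve relative links and drop #fragments before comparing.
            absolute, _ = urldefrag(urljoin(url, link))
            if urlparse(absolute).netloc == domain and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return fetched


# Tiny hypothetical site held in memory instead of fetched over HTTP.
site = {
    "https://example.org/": '<a href="/works">Works</a> <a href="https://elsewhere.net/">Out</a>',
    "https://example.org/works": '<a href="/">Home</a> <img src="/applet.png">',
}
print(crawl_domain("https://example.org/", site.get))
# ['https://example.org/', 'https://example.org/works']
```

The off-domain link is never queued, and the embedded image is queued but skipped because the stand-in `fetch` cannot retrieve it, which mirrors how content outside a crawler’s reach simply drops out of the capture.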

While crawlers archive what is available from the client side at the point of capture, the other two methods are labour-intensive and require an ongoing commitment to an active, in-house digital preservation strategy. Institutions can also nominate websites to be captured by the UK Government Web Archive and the Open UK Web Archive at the British Library.16 As a public records body, Tate has had its website captured by the UK Government Web Archive since the archive’s inception in 2004, and even earlier captures can be found on the Wayback Machine, which also continues to capture Tate’s website. The crawlers do not keep up with the rate at which Tate updates its website; the National Archives describes these captures as ‘snapshots’.17 While not every version or update of Tate’s website needs to be captured to provide an accurate record of its online activities, our research into the historic captures shows the limitations of crawlers in capturing content built on older software such as Java or Flash (no longer supported after December 2020). As a result, some net artworks, such as Golan Levin’s The Dumpster 2006, have never been completely captured.18 Because crawlers cannot capture some of the content that constitutes these artworks, gaps remain in our understanding and experience of them.
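Historic Wayback Machine captures of a given URL can be listed through the Internet Archive’s public CDX server API, which is one way to survey ‘snapshots’ like those described above. The sketch below builds a query URL and summarises a JSON response by year. It deliberately does not make the network request, and the sample response is a hypothetical, abbreviated stand-in rather than real capture data for Tate’s website. The parameter names (`url`, `output`, `from`, `to`) follow the public CDX API as we understand it; the current API documentation should be checked before relying on them.

```python
import json
from collections import Counter
from typing import Dict, List
from urllib.parse import urlencode

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def cdx_query_url(target: str, start: str = "", end: str = "") -> str:
    """Build a CDX query for all captures of `target`, optionally bounded by date."""
    params = {"url": target, "output": "json"}
    if start:
        params["from"] = start  # e.g. "2008"
    if end:
        params["to"] = end  # e.g. "2012"
    return CDX_ENDPOINT + "?" + urlencode(params)


def parse_cdx_json(body: str) -> List[Dict[str, str]]:
    """CDX JSON output is a list of rows; the first row holds the field names."""
    if not body.strip():
        return []
    rows = json.loads(body)
    if not rows:
        return []
    header, *records = rows
    return [dict(zip(header, record)) for record in records]


def captures_per_year(captures: List[Dict[str, str]]) -> Dict[str, int]:
    """Timestamps are YYYYMMDDhhmmss strings, so the first four characters are the year."""
    return dict(Counter(c["timestamp"][:4] for c in captures))


# Hypothetical, abbreviated response (not real capture data).
sample = json.dumps([
    ["urlkey", "timestamp", "original", "statuscode"],
    ["org,example)/", "20080101120000", "http://example.org/", "200"],
    ["org,example)/", "20080615120000", "http://example.org/", "200"],
    ["org,example)/", "20120301120000", "http://example.org/", "200"],
])
print(cdx_query_url("example.org", "2008", "2012"))
print(captures_per_year(parse_cdx_json(sample)))  # {'2008': 2, '2012': 1}
```

A per-year count like this makes the uneven rhythm of crawler ‘snapshots’ visible at a glance, though it says nothing about whether dynamic content within each capture actually works.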

Why archive the Intermedia Art microsite

Launched in 1998, Tate’s website is an important part of its identity as an art museum.19 In 2001 Tate rebranded its website as Tate Online, with the intention that it would take on the role of a fifth gallery. The website hosted a whole programme of contributions from all departments, including ‘extensive collection and archive displays, webcasts, magazine articles and e-learning modules’.20 BT renewed its sponsorship in 2006, and virtually curated exhibition initiatives like Explore Tate Britain and Explore Tate Modern, together with the net art commissions, brought Tate’s collection and practices to a global audience via the website.21

Fig.1

Screenshot of a Wayback Machine capture of the Net Art commissions as they linked through from the Tate homepage in 2007, before the website was redesigned and the Intermedia microsite was created in 2008

Source: Wayback Machine

Digital image © Tate

Fig.2

A National Archives capture of the Intermedia Art microsite shortly after its creation

Source: National Archives

Digital image © Tate

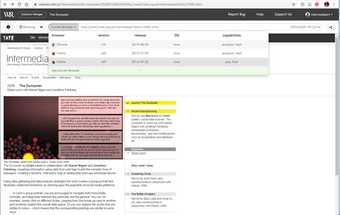

Fig.3

The most recent National Archives capture of the Intermedia Art microsite at the time of writing, from 9 February 2017

Source: National Archives

Digital image © Tate

Artwork © Steina

Rather than the links to the commissioned net art works appearing on Tate’s homepage as they had previously (fig.1), the Intermedia Art microsite (fig.2) was created in 2008 to bring all the commissions, texts and future programming together, and it has remained relatively unchanged since the project ended in 2012 (fig.3). The microsite placed all the previous commissions and events under a link titled ‘archive’, while the current programme had its own link. Within the Intermedia Art microsite, the use of the word ‘archive’ not only speaks to the impermanence of digital material by giving it the historic grounding that the word implies, but also places the future programming in the context of what had come before. The relative ease of collecting and moving digital material troubles the definition of an archive that emerges from archival practice: where traditional archival documents gain meaning by preserving the context and intention of their creation, a collection groups information together for other purposes.22 The UK Web Archive’s themed collections are an example of the latter, whereas the Intermedia Art microsite can be thought of as an ‘archive’ in the more established, traditional sense.23

Each part of the microsite has a clear provenance; we know the author or artist of each element under the umbrella of the net art team, and we know it was created with the intention of being used online. Collectively, these elements gain context. Provenance and context are fundamental aspects of a record, and a collection of records linked by provenance and context becomes an archive. The word carries a further meaning in a digital or computing context, as in an email archive. The Intermedia Art microsite has created such a store of digital information, but it has also archived the information in the traditional archival sense of the word. When we discovered that almost all of Tate’s institutional records from the net art programme were missing, the microsite took on a new significance for the research team, as it now held the only available information in one readily accessible place. The microsite’s ability to hold this information and make it available, like an archive, is therefore more closely aligned with how we have used it in the project than with how we might have used or viewed it simply as an output of the programme, had the other net art records not been lost.

This ‘archive’ has proven to be a valuable resource in our research for this case study, not only for experiencing the artworks but also for understanding and tracking changes in technology and in supported software such as Flash, which many of the artworks require to run. The microsite currently sits on a server that is no longer maintained and will eventually be decommissioned. Some artworks within it, such as Shilpa Gupta’s Blessed Bandwidth 2003, are no longer accessible because the artist has not maintained the domains; others, such as Golan Levin’s The Dumpster 2006, rely on software like Java that is no longer supported by the majority of contemporary browsers.24 A key aspect of our research has been the legacy of these commissions, and in an archival context this has extended to Tate’s own relationship with the internet, its website and its records. Archiving the website as it exists now, with its broken links, gaps and artworks that are no longer viable, offers an authentic insight into how we found the website. Tate does not archive its own website in-house. The UK Government Web Archive has a significant number of captures, or ‘snapshots’, of the Intermedia Art microsite, but it does not capture any external links beyond the specific URL. The crawler software used by the UK Government Web Archive has also failed to capture some types of content, such as videos, which are key to the ‘interview’ section of the microsite. These captures therefore leave gaps and missing contextual information.

Used in combination with the Intermedia Art microsite itself and the Wayback Machine captures (alongside our interviews with former Tate staff), these captures have allowed us to piece together how the net art commissions were presented on Tate’s website and how the microsite unfolded in its four active years (2008–12). However, because Flash will no longer be supported after December 2020, and updated browsers will not be able to access many of the artworks via these existing captures, these archived versions risk becoming an ineffective representation of the Intermedia Art microsite and its artworks.

The argument for the Intermedia Art microsite being the first website archived and made available via Tate’s Public Records collection is rooted in a question: why this website, and why is it different? We identified several differences. First, when compared with the records of other commissioning programmes in Tate’s Public Records collection, this website, in the context of internet art commissions, functions as a record in its own right. It is the documentation, catalogue, interpretation and exhibition of the programme all in one, and we should keep a record of such activities as we would for any other programme at Tate. As previously mentioned, we discovered early in our research that almost all of the records of the net art commissions had been lost, making the preservation of such a record even more important. As none of these artworks were in Tate’s collection, at least at the start of our research, this website is now the only record of how this programme unfolded.25 Archiving the website is part of a wider strategy to reconstitute the missing net art records.

Second, although various web archives hold a significant number of captures of the microsite, archiving it in-house will allow us to set the boundaries of the capture. This would include archiving the pages included by the artists that fall outside the Tate URL, forming the richest capture of the Intermedia Art microsite. Finally, this case study has focused on developing Tate’s capacity to collect internet art, and this has extended to Tate’s archival collecting practices. The website quickly became a very important resource in our research, and its entry into Tate’s Public Records collection not only tests our methodology and the tools for archiving dynamic, internet-based content but is also an active representation of the work being undertaken by the TiBM and Research teams.

How to archive the website

The research for this case study has spread across the Public Records, Tate Digital, Technology and the TiBM teams as we gauge Tate’s ongoing capacity to collect internet and software-based art. The mutual interest in documentation tools, web archiving, and preservation strategies and technologies led to much of my research being undertaken in collaboration with the TiBM team.

Collaboratively, we decided on an approach that combines what the National Archives identifies as client-side/transaction-based archiving with server-side preservation. We wanted to capture the Intermedia Art microsite as it exists now on the Tate server, as an authentic representation of how we found the site, including broken links and missing information. The server-side element involves taking a disc-image from the Tate server, which would allow the TiBM team to provide access to an emulated version of the website and, in some cases, to test rebuilding artworks. While this whole process has been driven by the need to capture the website before the server is eventually decommissioned, there was also a keen desire to keep the Intermedia commissions readily available to researchers and, given the nature of the commissions, online. We therefore also needed a tool that would allow us to archive the website in a way that maintained its interactivity, required minimal intervention on our part to record the pages and the artworks, and could be made available to researchers almost immediately.26

We decided to use Rhizome’s Conifer tool to carry out the web archiving.27 In the context of digital art, Rhizome has supported the creation, curation and digital preservation of internet-based art since its inception in 1996. In 1999 it launched ArtBase, an online archive of digital art, and in 2019 it published The Art Happens Here: Net Art Anthology.28 Because the internet is live and constantly changing, placing it in an archival context means taking a kind of ‘snapshot’. Conifer focuses on capturing ‘dynamic web content’ such as embedded video, Flash, audio and complex JavaScript, enabling more of this ‘liveness’ to be captured. It is effectively a web crawler, but it requires active engagement from the user, who sets the boundaries and parameters of the capture by clicking through the links and pages. The tool is simple to use and does not require building a technological infrastructure like some larger web archiving initiatives. Archived websites can be downloaded and stored in the WebARChive (WARC) format on a digital preservation system.29 Rhizome also offers a desktop version of Conifer in which captures can be accessed offline, but this would limit researchers to accessing these records on Tate’s onsite computers in the reading rooms. While we intend to make the captures available in this way, at the time of writing we also plan to use Rhizome’s cloud storage and include a link in our archive cataloguing, keeping this archived version of the Intermedia Art microsite readily available online.
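A WARC file is a plain sequence of records, each made up of a version line, named header fields and a content block of declared length, so a downloaded capture can be inspected independently of the tool that made it. The sketch below lists the URLs of `response` records in an uncompressed WARC using only the Python standard library. It is a simplified reader written for illustration: it assumes well-formed input and does not validate digests, and for real collections a dedicated library such as Webrecorder’s `warcio` is the safer choice. The demonstration records are hypothetical and carry far fewer fields than real ones. If a download is gzip-compressed (`.warc.gz`), opening it with `gzip.open(path, "rb")` should give a stream this reader can consume, since Python’s `gzip` module reads concatenated members.

```python
import io
from typing import BinaryIO, Dict, Iterator, List


def iter_warc_headers(stream: BinaryIO) -> Iterator[Dict[str, str]]:
    """Yield the header fields of each record in an uncompressed WARC stream."""
    while True:
        line = stream.readline()
        if not line:
            return  # end of file
        if not line.strip():
            continue  # blank lines separate records
        if not line.startswith(b"WARC/"):
            raise ValueError(f"expected a WARC version line, got {line!r}")
        headers: Dict[str, str] = {}
        while True:
            line = stream.readline()
            if line in (b"\r\n", b"\n", b""):
                break  # a blank line ends the header block
            name, _, value = line.decode("utf-8").partition(":")
            headers[name.strip()] = value.strip()
        # Skip the content block (for a response: HTTP headers plus payload).
        stream.read(int(headers.get("Content-Length", "0")))
        yield headers


def captured_urls(stream: BinaryIO) -> List[str]:
    """URLs for which the WARC holds an archived server response."""
    return [
        h["WARC-Target-URI"]
        for h in iter_warc_headers(stream)
        if h.get("WARC-Type") == "response" and "WARC-Target-URI" in h
    ]


def make_record(warc_type: str, uri: str, block: bytes) -> bytes:
    """Build a minimal demonstration record (real records carry more fields)."""
    head = (
        f"WARC/1.0\r\nWARC-Type: {warc_type}\r\nWARC-Target-URI: {uri}\r\n"
        f"Content-Length: {len(block)}\r\n\r\n"
    )
    return head.encode("utf-8") + block + b"\r\n\r\n"


demo = (
    make_record("request", "https://example.org/", b"GET / HTTP/1.1\r\n\r\n")
    + make_record("response", "https://example.org/", b"HTTP/1.1 200 OK\r\n\r\nhello")
)
print(captured_urls(io.BytesIO(demo)))  # ['https://example.org/']
```

Reading exactly `Content-Length` bytes, rather than scanning for blank lines, matters because the content block itself (here an HTTP message) contains blank lines.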

The process

Fig.4

Part of the spreadsheet created by the archives and TiBM teams to log each artwork and element of the website

© Tate

First, we created a working Excel spreadsheet that mapped the website (fig.4). The spreadsheet allowed us to track what was working, understand how the web pages relate to one another and how they can be navigated, and see what is accessible via the UK Government Web Archive captures. The Intermedia Art homepage links to each of the artworks, the supporting programme (events, discussions, podcasts) and contextualising texts. Each artwork page links to the contextualising texts and to artists’ biographies. These pages and the artworks themselves also include links outside the Intermedia Art microsite. The mapping also logged which works are still functioning and accessible, the technologies each work relies on, and whether each was hosted on the Tate server or by the artist.
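The same inventory can also be kept as structured data, which makes it easy to filter, for example, for elements that are broken or missing from existing captures. The sketch below is a hypothetical schema in Python: the column names are our illustration of the categories described above, not the headings of the actual spreadsheet shown in fig.4, and the example rows are invented placeholders. It writes CSV, which Excel can open.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import List


@dataclass
class SiteElement:
    """One row of a hypothetical site-mapping spreadsheet."""
    section: str          # artwork, event, interview or text
    title: str
    url: str
    linked_from: str      # parent page, recording how pages relate
    hosted_on: str        # "Tate server" or "artist server"
    technologies: str     # e.g. "Flash", "Java applet", "HTML"
    working: bool         # does it still work in a current browser?
    in_gov_capture: bool  # accessible in a UK Government Web Archive capture?


def to_csv(rows: List[SiteElement]) -> str:
    """Serialise the inventory with a header row matching the field names."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(SiteElement)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


def needs_attention(rows: List[SiteElement]) -> List[str]:
    """Titles of elements that are broken or absent from existing captures."""
    return [r.title for r in rows if not r.working or not r.in_gov_capture]


rows = [
    SiteElement("artwork", "Example applet work", "https://example.org/applet",
                "https://example.org/", "artist server", "Java applet", False, True),
    SiteElement("text", "Example essay", "https://example.org/essay",
                "https://example.org/", "Tate server", "HTML", True, True),
]
print(to_csv(rows))
print(needs_attention(rows))  # ['Example applet work']
```

Recording the parent page in each row is what lets the inventory double as a map of how pages relate to one another, rather than just a flat list of URLs.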

Fig.5

Screenshot of the web archiving of Golan Levin’s The Dumpster 2006 in an emulated browser

Source: Rhizome

Digital image © Tate

Using this document, we decided to group the collections into artworks, events, interviews and texts. We navigated the website by following each link from each of these pages, which meant some links were captured more than once but allowed us to break the work down and track progress. Conifer works by entering the URL to be archived; the content (including dynamic content, as you press play) is then captured as you navigate the website. Conifer also offers the option to record in emulated historical browsers that still support obsolete technologies such as Java applets, small Java applications embedded in a website. As the recordings were made before Flash was discontinued, we quickly learnt the importance of the browser used for recording and its settings, including whether Flash was enabled. For example, it was not possible to record works like Golan Levin’s The Dumpster 2006 in a contemporary browser.30 To experience such works, they had to be recorded in an emulated browser that supported Java applets (fig.5). The Conifer tool allows us to take a step back in time and still access works that cannot be experienced without emulated browsers.

Each individual link needs to be clicked to ensure it has been captured; each one is then added to your ‘collection’. The capture does not replay like a screen recording; it is an interactive version that allows users to navigate the captured pages as they wish. If pages or links are missed in a recording session, they can be ‘patched’ in to ensure complete captures. For the Intermedia Art microsite, each artwork and event has been recorded as its own individual collection, including links to the contextualising texts and biographies.

Setting the boundaries of what to capture returns to an earlier point about the Intermedia Art microsite representing the traditional documentation of a commissioning programme at Tate. The complex inter-relationships of information on the website led me to look at the retention schedules for exhibitions and displays in order to establish the parameters of what to capture. Comparable paper records such as press releases, events, recordings, interpretation and catalogue essays would be transferred to Public Records at the end of the exhibition and retained permanently. Several artworks, such as Susan Collins’s Tate in Space 2002 and Heath Bunting’s BorderXing Guide 2002, consist of extensive series of linked pages, and the interviews and contextualising texts also include links outside the Intermedia Art microsite and the Tate URL.31 For the capture, we decided to follow all links one level outside the Tate URL, on the rationale that these pages were part of the artwork as decided by the artist and should be included.32 However, we decided to capture only the first page of any external websites linked from the artworks or contextualising texts. Researchers can make a note of these external links and seek further information if necessary; the paper-based records of a commissioning project would include final drafts of publications but would not collect the research material referenced.
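One reading of the capture boundary described above can be stated as a simple rule: pages inside the defined scope (the microsite plus the artist-hosted pages that make up an artwork) are always captured, while a page outside that scope is captured only when it is linked directly from an in-scope page, and nothing is followed beyond it. The sketch below expresses that rule in Python. Defining scope as a list of URL prefixes is our assumption, and the prefixes shown are hypothetical placeholders, not the real Tate or artist URLs. In Conifer this judgement is made by the person recording, so code like this could at most serve as a checklist.

```python
from typing import Iterable, Optional

# Hypothetical scope: the microsite plus the artist-hosted pages of an artwork.
SCOPE_PREFIXES = (
    "https://www.example-museum.org/intermedia-art/",
    "https://artist.example.net/artwork/",
)


def in_scope(url: str, prefixes: Iterable[str] = SCOPE_PREFIXES) -> bool:
    """True if the URL falls under one of the defined scope prefixes."""
    return any(url.startswith(prefix) for prefix in prefixes)


def should_capture(target: str, linked_from: Optional[str],
                   prefixes: Iterable[str] = SCOPE_PREFIXES) -> bool:
    """Capture in-scope pages, plus only the first page of external sites they link to."""
    prefixes = tuple(prefixes)  # allow a one-shot iterable to be reused below
    if in_scope(target, prefixes):
        return True
    # One level outside the boundary: only if the link comes from an in-scope page.
    return linked_from is not None and in_scope(linked_from, prefixes)


home = "https://www.example-museum.org/intermedia-art/"
reference = "https://research.example.com/paper"
print(should_capture(reference, home))                # True: first external page
print(should_capture(reference + "/page-2", reference))  # False: beyond the first page
```

The rule mirrors the paper-records analogy in the text: the published outputs are kept, while the research material they point to is noted but not collected.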

Evaluating the process

As this is the first website Tate has archived in-house, we are testing the parameters of what constitutes valuable information and assessing the viability of the Conifer tool for this purpose. The process was one of experimentation that allowed space for change as it unfolded. It also allowed space for mistakes and reflection, with the intention of developing a methodology that can become part of Tate’s established archival practice.

As mentioned above, works like BorderXing Guide and Tate in Space comprise complex networks of links. To capture works containing a large number of links into a ‘collection’, each individual link has to be clicked, and while Conifer allows users to patch missed links into the collection, there is not (or was not at the time of use) a tool that allows the user to check that all links have been captured. As a team we attempted to find a tool that would map the site and all its internal links, which we could use as a checklist.
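At its core, the checklist we were looking for is a set comparison: the URLs in a site map, minus the URLs that have actually been captured, leaves the links still to be patched. The sketch below shows that comparison in Python, assuming both lists are already available as plain URLs (for instance, from a site map and from the list of pages in a downloaded capture). The normalisation step, which only removes surrounding whitespace and `#fragments`, is a deliberately minimal assumption; real URLs may need more careful matching, for example of trailing slashes or `http` versus `https`. The URLs are hypothetical.

```python
from typing import Iterable, List
from urllib.parse import urldefrag


def normalise(url: str) -> str:
    """Strip whitespace and any #fragment, which browsers do not request separately."""
    return urldefrag(url.strip())[0]


def links_to_patch(site_map: Iterable[str], captured: Iterable[str]) -> List[str]:
    """URLs in the site map with no matching capture, in site-map order, without duplicates."""
    captured_set = {normalise(u) for u in captured}
    missing: List[str] = []
    seen = set()
    for url in site_map:
        key = normalise(url)
        if key not in captured_set and key not in seen:
            seen.add(key)
            missing.append(key)
    return missing


site_map = [
    "https://example.org/work/",
    "https://example.org/work/page-1#top",
    "https://example.org/work/page-2",
    "https://example.org/work/page-1",
]
captured = ["https://example.org/work/"]
print(links_to_patch(site_map, captured))
# ['https://example.org/work/page-1', 'https://example.org/work/page-2']
```

Keeping the output in site-map order means the list can be worked through in the same sequence as the recording sessions, patching each missing link in turn.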

Fig.6

Screenshot of a Screaming Frog recording of Graham Harwood’s Uncomfortable Proximity 2000

Source: Screaming Frog

We used Screaming Frog, a web crawler and search engine optimisation (SEO) tool that also generates a URL site map.33 We tested it on a selection of artworks, including BorderXing Guide, Tate in Space and Graham Harwood’s Uncomfortable Proximity 2000. Harwood’s work was originally an intervention on the Tate website that plunged every third visitor into a ‘mongrelised’ version of the website showing an alternative history of Tate. The work now exists as a digital scrapbook of images and texts about each ‘Mongrel’ site, which is what the Screaming Frog map (fig.6) shows. The comparison between artworks was interesting, as it showed that not all the links within BorderXing Guide and Tate in Space could be captured by the tool. The free version of Screaming Frog limits the number of URLs the tool returns, and these works exceeded that number, showing just how large the Intermedia Art microsite is. Further investigation revealed that, for works that remained on the artist’s server, such as BorderXing Guide, the artwork URLs were not distinct from other content on Bunting’s website, so the crawl exceeded the capacity of the free version of the tool.34 We are still trying to understand this, but with limited time to learn an additional tool, we proceeded to capture and check the links via Conifer, which was time-consuming but effective. This involved double-checking captures and ‘patching’ in missing links manually.
Since we undertook the web archiving exercise, Conifer has launched an autopilot feature for ‘popular web platforms’ (including social media websites like Twitter and Instagram) that mimics typical user behaviour on those pages and may speed up the process.35 The feature currently only mimics scrolling rather than working through links, but it is easy to see how this would benefit a user who decides to collect their Instagram or Twitter feed retrospectively.

Conclusion

From an archival perspective, this moment of research has been triggered by one key factor – loss. The missing institutional records about the original project made the Intermedia Art microsite more valuable than may have initially been anticipated. Coupled with the knowledge that many of the artworks were dependent on software that is rapidly expiring, and discussions about the secondary server being decommissioned, this case study was driven by a palpable sense of loss, both actual and potential.

The internet was the key component in this moment of institutional practice; the Intermedia Art microsite was a vehicle for both the exhibition and interpretation of these artworks, and the records, even the reconstituted ones, needed to reflect this. Collaboratively, we agreed that, given the records we held, the captures of Tate’s website and the net art commissions held in various web archives were not enough. These captures show the commissions in their earliest form, embedded on the homepage; then the ‘newly’ launched Intermedia Art microsite in 2008; and later still its final version, last updated in 2012, with which we became most familiar. However, expiring dependencies put the content of the microsite at risk. The systematic check of each component of the website and the web archive captures showed that the crawlers did not capture the dynamic content of the artworks or the video interviews and that, as browsers continue to be updated and software ceases to be supported, much of the content would be lost. Rhizome’s Conifer offered us a tool to archive the Intermedia Art microsite as we came to find it, maintaining as much of its interactivity as possible, and thus its integrity and authenticity as an archival record.

While this text is not an exhaustive investigation into the capabilities and changing methodologies of web archiving, it offers an insight into how we have navigated this potential for loss while testing whether Tate can extend its capacity to collect internet art and websites to its archival and institutional recordkeeping practices. The research also opened up opportunities for institutional collaboration, experimentation and potential changes in practice. The original Intermedia Art microsite, as well as Tate’s other microsites, will stay online as long as the secondary server remains active. The Conifer captures will be downloaded as WARC files into Tate’s digital preservation system and made accessible via the public record catalogue, both online and onsite in Tate’s archive reading room. This research will also be presented to the Public Records and Tate Archive teams as a foundation for collaboratively developing an in-house web archiving strategy at Tate.